Livestreams are not live

Video latency simply explained

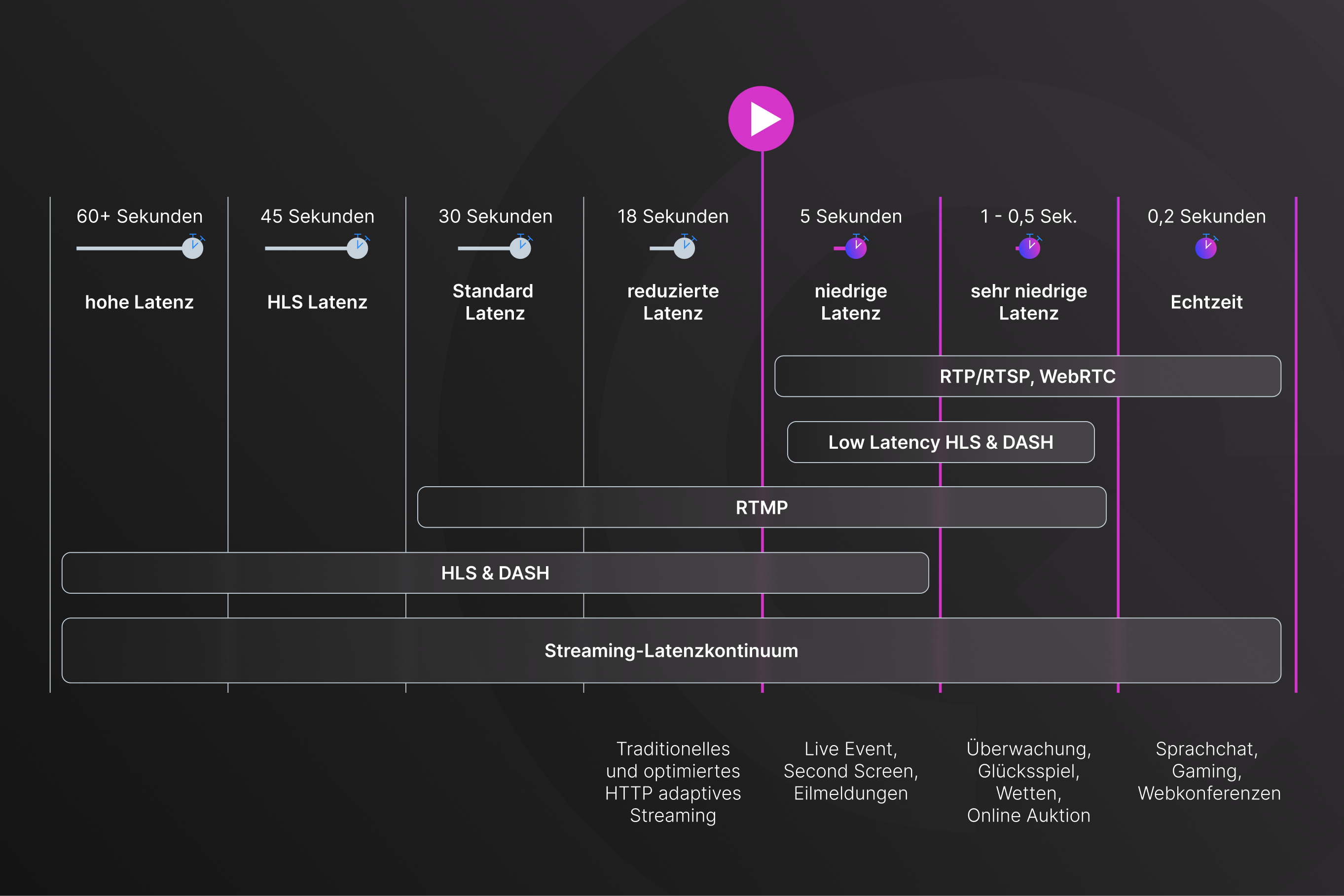

Livestreams are not truly live: there is usually a delay of a few seconds between the live event and the video playing on the viewer's device. This delay is called video latency. Technically speaking, latency is the time that elapses between the signal being produced on site and its display on the viewer's device.

Latency occurs in livestreams because processing and transmitting live video takes time. The delay accumulates across several steps, for example decoding the incoming stream, generating the individual formats (renditions) for adaptive streaming, and decoding again on the viewer's device.

How does the encoding of a livestream work?

A livestream is sent from the source where the video is recorded (for example, an encoder behind the vision mixer) to a server via a streaming protocol, usually SRT or RTMP. The server then processes the video feed.

Depending on the settings, the video signal is either segmented directly into smaller parts for an HTTP-based streaming protocol, or first prepared in different codecs, resolutions and bitrates to form an adaptive multi-bitrate stream. The latter is needed to cover as many devices and network conditions as possible.
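To make the adaptive multi-bitrate idea concrete, here is a minimal Python sketch that builds an HLS master playlist listing several renditions and picks the one that fits a measured bandwidth. The rendition ladder, the file paths and the `pick_rendition` helper are illustrative assumptions, not an actual platform configuration; only the `#EXTM3U` and `#EXT-X-STREAM-INF` tags come from the HLS format itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str        # hypothetical directory name for this quality level
    width: int
    height: int
    bandwidth: int   # peak bitrate in bits per second


# Hypothetical ladder; real values depend on the content and the platform.
LADDER = [
    Rendition("360p", 640, 360, 800_000),
    Rendition("720p", 1280, 720, 2_800_000),
    Rendition("1080p", 1920, 1080, 5_000_000),
]


def master_playlist(renditions):
    """Return an HLS master playlist that lists one variant per rendition."""
    lines = ["#EXTM3U"]
    for r in sorted(renditions, key=lambda r: r.bandwidth):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth},"
            f"RESOLUTION={r.width}x{r.height}"
        )
        lines.append(f"{r.name}/index.m3u8")
    return "\n".join(lines) + "\n"


def pick_rendition(renditions, measured_bps):
    """Pick the highest-bitrate rendition that fits the measured bandwidth.

    Falls back to the lowest rendition when nothing fits.
    """
    ordered = sorted(renditions, key=lambda r: r.bandwidth)
    fitting = [r for r in ordered if r.bandwidth <= measured_bps]
    return fitting[-1] if fitting else ordered[0]


if __name__ == "__main__":
    print(master_playlist(LADDER))
    print(pick_rendition(LADDER, 3_000_000).name)
```

Real players use more elaborate heuristics (buffer level, throughput history) than this simple "largest that fits" rule, but the principle of switching between renditions as the network changes is the same.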

The compressed and segmented video data is distributed via a content delivery network (CDN).

Effects of high latency

Viewers want to feel that they are watching live broadcasts in real time. After all, they want to experience the event "live", as if they were there in person. High latency harms the viewing experience and can lead viewers to abandon the livestream. Those viewers are then usually much less likely to watch other streams from the same source, and are lost to the content provider.

How does HLS streaming work?

Most HLS (HTTP Live Streaming) streams start with an RTMP ingest that the video platform automatically converts to HLS for delivery. This gives viewers high-quality HTTP live streaming, but can result in latency of 30 seconds or more, a huge delay for a livestream.

RTMP is still used for ingest, rather than using HLS for both ingest and delivery, because HLS has much higher latency. Combining RTMP ingest with HLS delivery enables playback in an HTML5 video player on all devices.

To reduce livestream latency, HLS segments have been significantly shortened in the past, and encoding settings have been optimised, for example by shortening keyframe intervals. These optimisations reduced latency from 18 to 30 seconds or more down to 4 to 8 seconds.
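The effect of segment length can be approximated with simple arithmetic. The sketch below assumes that a player starts about three segments behind the live edge (the HLS specification advises players not to start closer than three target durations to the end of the playlist), and it ignores encoding, CDN and network time. The function name and the example segment lengths are illustrative.

```python
def estimated_player_delay(segment_seconds, segments_behind_live=3):
    """Rough delay caused by segment buffering alone, in seconds.

    Assumption: the player starts `segments_behind_live` segments behind
    the newest segment. Encoding, CDN and network time are not included.
    """
    if segment_seconds <= 0 or segments_behind_live < 0:
        raise ValueError(
            "segment_seconds must be positive and "
            "segments_behind_live must not be negative"
        )
    return segment_seconds * segments_behind_live


if __name__ == "__main__":
    for seconds in (10, 6, 2):
        delay = estimated_player_delay(seconds)
        print(f"{seconds} s segments -> about {delay} s delay")
```

Under these assumptions, segments of 6 to 10 seconds give roughly 18 to 30 seconds of delay, while 2-second segments give about 6 seconds. Actual figures vary with the player and the rest of the delivery chain.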

3Q enables less than 5 seconds of latency

To reduce latency even further while maintaining the same quality, we are introducing the protocol "Low-Latency HTTP Live Streaming", LL-HLS for short. If you send the signal to us via RTMP, the new low-latency option can reduce streaming latency to 3 to 5 seconds. If you use an SRT encoder, the delay can be reduced further.

The big advantage of our solution is that the low-latency mode continues to deliver your streams in very high quality and at multiple quality levels ("adaptive streaming").

We are constantly working to further reduce latency so that our customers can produce live streams that are transmitted in near real time.

Tags:

Livestreaming

September 22, 2023
